Twitter needs to do a better job of explaining how we got duped by Russia

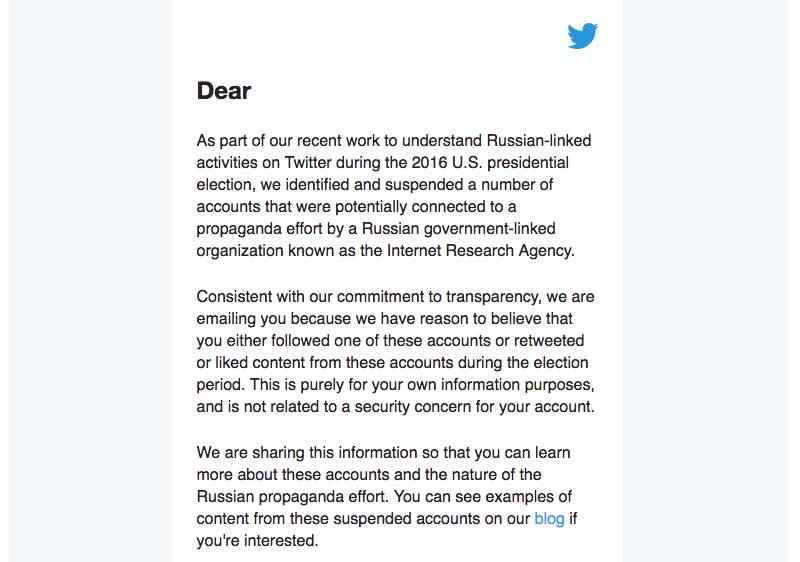

On Friday, Twitter (TWTR) announced that it has begun reaching out to users who followed, retweeted or liked tweets from accounts identified as being connected to Russia’s campaign to influence the 2016 presidential election.

The company, which met with a congressional committee last year to discuss the impact of social media and “fake news” on the election, says the move is meant to further reinforce its commitment to transparency.

Unfortunately, Twitter’s outreach doesn’t fully educate users on how they can avoid interacting with Russia-linked posts, users and other suspect accounts in the future.

What good does this do?

In its emails to users who interacted with accounts linked to the Russian disinformation campaign, Twitter explains that it has reason to believe the recipient either followed a Russia-linked account or liked or retweeted one of its tweets.

The company goes on to explain the user’s account security hasn’t been compromised and that the note is merely meant to show that Twitter is aware of and addressing the issue of fraudulent accounts.

Twitter then provides a link to a blog post detailing its ongoing efforts to identify and snuff out accounts that posted untrue or misleading tweets related to Russia’s election meddling. The company even provides a few examples of what such tweets looked like (they look just like clickbait internet ads) and explains that the number of accounts spreading false information was incredibly small compared to its overall user base.

But here’s the thing: Twitter doesn’t tell you anything about the account or tweet that you personally interacted with. The company says that’s because it has already suspended the accounts in question and it can’t disclose any information about them publicly.

That makes sense, sure, but if you want to teach people which kinds of accounts to avoid, there’s no better way than showing them the actual posts and accounts they clicked on that were part of Russia’s election-meddling campaign.

Moving forward

To its credit, Twitter does have a plan in place to help mitigate the impact of fake accounts like those used by Russia-linked operatives in the lead-up to the 2016 election. For the 2018 elections, the company will attempt to fight impostor accounts for politicians and political parties, monitor trends in the run-up to the elections, improve its machine learning to identify spam accounts and partner with groups that help educate users.

It’s great that Twitter wants to inform users with the help of groups like Common Sense Media and the National Association for Media Literacy Education, but if users can’t see what was wrong with a particular account they interacted with, what good does any of that do?

Facebook (FB), for its part, recently indicated that it will begin polling its own users to help the company identify trustworthy news sources. The problem with this, though, is that the people judging these sources are the same folks who clicked on or shared fraudulent posts to begin with.

However much these companies would love to put the issue of disinformation spreading through their services to rest, they both have a long way to go. For now, it seems the only real way to deal with such fake posts and accounts is to stop them before they can do any real damage.

But with such massive networks, Twitter and Facebook may never be able to tame their own creations.

Email Daniel Howley at [email protected]; follow him on Twitter at @DanielHowley.